On independent data monitoring committees in oncology clinical trials

Editor’s note:

The special column “Statistics in Oncology Clinical Trials” is dedicated to providing state-of-the-art reviews of, or perspectives on, statistical issues in oncology clinical trials. Our Chairs for the column are Dr. Daniel Sargent and Dr. Qian Shi, Division of Biomedical Statistics and Informatics, Mayo Clinic, Rochester, MN, USA. The column is expected to convey statistical knowledge that is essential to trial design, conduct, and monitoring for a wide range of researchers in the oncology area. Through illustrations of the basic concepts, discussions of current debates and concerns in the literature, and highlights of new developments, we hope to engage and strengthen the collaboration between statisticians and oncologists in conducting innovative clinical trials. Please follow the column and enjoy.

Introduction

This article addresses clinical trials in oncology that require independent data monitoring committees (IDMCs). The word “independent” emphasizes that members of these committees are neither part of the sponsor organization nor trial investigators. Typically, oncology trials with IDMCs are multicenter, large, and of long duration. The usual primary outcome is death, progression-free survival (PFS), or sometimes response rate. Some are smaller trials in which the outcomes may be tumor size or biomarker data. Ideally, controlled clinical trials are double-masked; however, in oncology many such trials are necessarily open-label or single-masked. Some trials with IDMCs may be single-arm studies. The rationale for an IDMC stems from several sources: the ethical compact between those running the trial and its participants to ensure the participants’ protection; the sponsor’s regulatory requirement to report adverse experiences during the trial; the sponsor’s financial incentive to end the trial early if the drug has no effect or if it confers dramatic benefit; and the investigators’ desire to disseminate new scientific information as soon as it becomes reliable. The IDMC, a committee charged with monitoring the safety and efficacy of the new treatment and the progress of the trial, is ideally positioned to perform such tasks, provided that its members are independent of the sponsor and have no vested interest, either scientific or financial, in the trial’s results or in its continuation.

Groups other than the IDMC monitor safety as well. The investigator, who is the closest to the participant, observes the participant at the bedside or at the protocol-defined clinic visit. On noting an unexpected serious adverse event that appears to have been caused by the study drug, the investigator has a regulatory obligation to report that event to the sponsor. Most industrial sponsors employ a so-called “pharmacovigilance” team responsible for reviewing adverse events (AEs), especially those deemed to be “serious”, to assess the safety of the experimental treatment. The local institutional review boards (IRBs) or ethics committees (ECs) review information about the events that occur at their site. Although these three mechanisms—the investigator, the pharmacovigilance group, and the IRB/ECs—can identify many worrisome AEs, their lack of knowledge of the entire experience of the trial and the fact that many studies are masked make it difficult to attribute an adverse event to an experimental treatment. In a masked trial, the investigator, the pharmacovigilance group, or the IRB/EC may observe a surprising and worrisome event, but they do not know whether the event occurred in a treated or a control participant. Furthermore, the investigator and the IRB/ECs do not know how many of these events have occurred in the trial as a whole. Only an IDMC, unmasked to the treatment allocation and aware of all events that have occurred, is positioned to see all the data by treatment group.

Regulations, guidances, and standards

Clinical trials have used IDMCs of one form or another for decades. In 1979, for example, the Clinical Trial Committee Guide of the National Institutes of Health (NIH) in the United States stated, “...every clinical trial should have provisions for data and safety monitoring... Monitoring should be commensurate with risks.” (1). The 1980s saw many models for data monitoring; practices in the United States and elsewhere differed somewhat, and approaches differed by disease. In the early 1990s, the various institutes of the NIH sponsored a conference on data monitoring boards at which participants shared very different experiences (2). In 1994, the NIH Committee on Clinical Trial Monitoring stated, “...all trials, even those that pose little likelihood of harm, should consider an external monitoring body” (3). Currently, NIH-funded trials require a data safety monitoring “plan”, as distinct from a data safety monitoring “committee”; sometimes that plan will involve external independent experts, and sometimes it will consist solely of internal members. Several guidances govern practice for trials in the regulatory setting (4,5).

The International Conference on Harmonisation Guidance E6 (6), Section 5.5, says that IDMCs should assess the progress of a trial, in terms of both safety and efficacy, and should make recommendations to the sponsor as to whether to continue, modify, or stop the trial. Guidance E9 (5) states that the IDMC should have written operating procedures and should maintain records of its meetings and of the interim results it reviewed. At the end of the study, these records allow investigators, the sponsor, and regulatory agencies to assess the basis for the IDMC’s decisions. The guidance stresses that the independence of the committee, by controlling the sharing of information about the comparative arms, protects the integrity of the trial from the negative impact of access to information about the ongoing trial. To that end, the charter or operating procedures of the IDMC should clearly define the sponsor’s role with respect to the IDMC and should address how the dissemination of interim results within the sponsor organization is controlled. Guidance E9 (section 4.5) stresses that the IDMC may recommend stopping the trial if the data clearly establish the superiority of the treatment, if the data show that demonstrating a clinically relevant treatment difference has become unlikely, or if unacceptable AEs are apparent.

The recommendations of the DAMOCLES (Data Monitoring Committees: Lessons, Ethics, Statistics) Study Group from the UK National Health Service are similar to those in the ICH guidance. According to DAMOCLES, IDMCs should “consider the behavioural, procedural, and organizational aspects on data monitoring in randomized clinical trials (RCTs).” (7). See also Ellenberg (8) and Herson (9).

Structure of an IDMC

The sponsor and investigators must understand that IDMCs take their role seriously. Although sponsors often try to limit the IDMC’s role (e.g., by not allowing the IDMC access to data on efficacy), experienced IDMC members recognize that informed judgments require accurate, timely, and complete data. All parties in the trial should understand the IDMC’s role, for example, whether it is charged primarily with safety or whether its remit also includes monitoring efficacy. In choosing IDMC members, the sponsor should select a committee large enough to provide the relevant expertise but small enough that discussions do not get bogged down and that a time when everyone can meet is not hard to find. IDMCs may be as small as 3 people or as large as 15; in general, the more complicated the study, the larger the board. The committee is typically a multidisciplinary body composed of clinicians, a statistician, and perhaps an ethicist. The clinicians may all have the same specialty, but it is often beneficial for them to have somewhat diverse backgrounds: an oncologist or two along with imaging and pathology specialists, depending on the nature of the disease under study, the endpoints involved, and the mechanism of action of the therapeutic agent. At least the chair and the statistician should have prior experience on IDMCs. If possible, IDMCs should include a statistical “trainee” to prepare another generation of experienced statisticians (10).

The members should be independent and disinterested (but not uninterested) in the outcome of the trial. Members should also be free of both financial and non-financial conflicts of interest, such as owning stock in any pharmaceutical company, serving as an investigator in the study of interest, or holding any relevant patents.

The charter should specify the IDMC’s operating rules: what data it will see, to whom it will report, and what statistical guidelines, if any, it will adopt. It should specify whether the committee will see data fully masked (i.e., all lumped together, not separated by treatment group), partially masked (i.e., separated by treatment group but without identification of which group is which), or unmasked (i.e., separated by treatment group with each group identified). We strongly recommend that IDMCs view the data unmasked. See Meinert for a cogent rationale for unmasked review (11).

The charter should describe the structure of the meetings. IDMC meetings usually begin with an open session during which the sponsor and the investigators present their views of the progress of the trial. The sponsor may discuss data from related trials and review recent relevant literature. It may present the IDMC with concerns raised by its pharmacovigilance group, the investigators, the IRBs/ECs, or regulatory agencies.

A closed session follows, attended only by the members of the IDMC and the statistical group presenting the data. After the closed session comes an optional executive session restricted to the voting members of the IDMC. The meeting may end with another open session at which the IDMC reports its recommendations to the sponsor and investigators. Variations on this theme abound. Not all IDMCs hold executive sessions, although many charters recommend that the IDMC hold one because an executive session allows the IDMC to speak in confidence if it is dissatisfied with the presentation by the reporting group. Sometimes the sponsor and the investigators attend the open sessions only by telephone.

The reporting statistician

Various models are available for summarizing the data for the IDMC. Sometimes sponsors prepare the data. For example, Snapinn describes an approach that separates the function of the reporting statistician from that of the other staff members of a study team (12). Often, the organization responsible for the final report (e.g., the data coordinating center in NIH-type trials or the contract research organization in many industry-sponsored trials) presents the data. Still another model, espoused by the FDA guidance document (4), separates the reporting statistician from the study statistician. Various authors present cogent arguments for each of these views. Those who argue that the study statistician should report to the IDMC point to the knowledge that the study statistician has of the database; they claim that without an intimate understanding of the data, the reporting statistician cannot present information accurately to the IDMC. Those who believe that the reporting statistician can and should be independent of the study contend that independence does not mean ignorance; to report effectively and accurately, the reporting statistician must learn about the structure and the peculiarities of the database. In some cases, the statistician member of the IDMC reports the data. We do not recommend this model because such statistician members are no longer independent and may be put in the situation of performing analyses that support their own thoughts about the trial.

IDMCs struggle with the choice between timely data and clean data. In a perfect world the data an IDMC sees would be both timely and error-free, but such perfection is unrealistic in clinical trials. Given the choice between clean and timely, we strongly recommend timely, understanding that the data may be incorrect and may point toward a false conclusion. A sophisticated IDMC treats dirty data as a potential warning signal, not as a final view.

A typical report will summarize the protocol, remind the IDMC members of the outstanding issues from the previous report, describe recruitment and follow-up, display baseline data, report on whether the randomization was performed correctly, display information on the timeliness of data and adjudication of outcome events, report information on dosing and adherence to the study protocol, and provide data on the end points measuring efficacy. Useful reports start with a one-page summary of the current results along with the results from the previous report. Typical IDMC reports deal primarily with safety: serious and non-serious AEs, vital signs, and laboratory parameters. The investigator will have judged some of the events to be related to the study drug; however, the IDMC’s assessment of causality will generally rely on the difference between the event rates in the treated and control groups. Good reports are well organized, complete, and succinct.
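To make the between-group comparison concrete, the sketch below computes, for each adverse-event term, the difference in crude incidence between the treated and control groups with a Wald 95% confidence interval. It is a minimal illustration under stated assumptions, not a recommended analysis: the counts, arm sizes, and the `risk_difference` helper are hypothetical, and the intervals are not adjusted for the many terms examined, so they serve as a screen rather than as formal tests.

```python
from math import sqrt
from statistics import NormalDist


def risk_difference(events_trt, n_trt, events_ctl, n_ctl, level=0.95):
    """Crude incidence difference (treated minus control) with a Wald interval."""
    p_trt = events_trt / n_trt
    p_ctl = events_ctl / n_ctl
    diff = p_trt - p_ctl
    se = sqrt(p_trt * (1 - p_trt) / n_trt + p_ctl * (1 - p_ctl) / n_ctl)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return diff, diff - z * se, diff + z * se


# Hypothetical counts (patients with >= 1 event per arm), for illustration only.
N_TRT, N_CTL = 200, 200
ae_counts = {"Nausea": (60, 40), "Rash": (30, 12), "Headache": (15, 16)}

for term, (e_trt, e_ctl) in ae_counts.items():
    diff, lower, upper = risk_difference(e_trt, N_TRT, e_ctl, N_CTL)
    print(f"{term:<10} RD = {diff:+.3f} (95% CI {lower:+.3f} to {upper:+.3f})")
```

In this made-up example the rash interval excludes zero while the headache interval does not; with many terms, some intervals will exclude zero by chance alone.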

The design of reports to an IDMC must account for the interim nature of the data. Reports that are merely incomplete versions of the final report cannot provide the IDMC with sufficient information for it to make reasoned judgments. The board must understand the nature of the information it is reviewing: how current it is, how correct it is, and what data are not yet available. The purpose of the final study report is to summarize the data from the study and to make conclusions about that study. The purpose of an interim report, on the other hand, is to provide data for an IDMC to recommend whether or not to change or stop a study.

Trialists disagree about whether the fact that the IDMC has met should remain known only to those with an operational need to know or whether it should be made available to the investigators and the IRBs. We view the advantages of openness as outweighing the perceived need for secrecy. The arguments for secrecy stem from how those outside the IDMC may interpret what happened at the meeting. When an IDMC does not recommend stopping the trial after its meeting, people aware of the stopping guidelines will calculate the likelihood that the trial is meeting its goals. Especially for small-company sponsors, stock prices may fluctuate wildly immediately before and after an IDMC meeting. On the other hand, reporting that a meeting occurred and that the IDMC found no reason to change the protocol provides comfort to the IRBs.

Early stopping

Stopping a trial early is a major decision. Failing to stop when the data are compelling can hurt the participants in the trial and future patients. On the other hand, stopping a trial prematurely can doom a product or a new therapeutic intervention. IDMCs must aim for defensible decisions. Sponsors who limit the IDMC’s access to data hamper intelligent decision-making. At the end of a trial, the investigators and sponsor will analyze the data in many ways. If an IDMC has access only to limited data when it is about to make its recommendation, it is subject to error. After all, it is dealing with less information, and hence more variability, than the study planned; limiting it further by denying the IDMC information it requests renders it less able to make a reasoned decision.

Industrial sponsors, perhaps in an effort to save money and perhaps as a mechanism to prevent the IDMC from making decisions based on ambiguous data, often limit the range of data the IDMC receives. Sponsors must trust IDMCs; sometimes an IDMC will make a serious mistake, but an experienced IDMC armed with the information it needs is less likely to err than one with limited access to data.

Record keeping and communications

IDMCs must keep impeccable records of their actions, especially when the sponsor intends to use the monitored study for regulatory filing. The minutes must show that the IDMC did not change its behavior in response to observed data in a way that compromises the integrity of the data. Full and honest disclosure of what happened will serve the study and future IDMCs most effectively. IDMC members should remember that interim analyses can threaten the integrity of a trial if the investigators or the participants see the interim results, if the final analyses do not account for the multiplicity of looks at the data, or if anyone involved in the operation of the ongoing trial knows the interim results. For studies in which a statistical group other than an independent group presents the data to the IDMC, the presenters must keep information about the ongoing study confidential from the remainder of the study team.

IDMCs should report as little as possible to the sponsor. Often a recommendation to ‘continue the trial as planned’ is sufficient. Generally the IDMC should not disclose to the sponsor its requests for additional analyses, the issues it feels it needs to monitor closely, or other topics discussed during the closed session. Obviously, changes to the protocol and other issues that affect the conduct of the study should not be kept secret; however, requests for additional IDMC meetings should, if possible, not be divulged to the sponsor.

Monitoring for efficacy

A well-designed clinical trial includes a clearly specified primary outcome; many people view a trial as “successful” if it demonstrates, at its prespecified significance level, that the treatment under study shows benefit on that primary outcome. The trial may also have secondary outcomes whose purpose is to provide supportive data indicating that the effect is real, not an artifact or chance finding. Specifying a primary outcome allows statistical machinery to test that outcome both at the end of the study and during its course. Over the past 50 years, statisticians have developed group sequential methods that allow the primary outcome to be monitored for efficacy during the trial while preserving the overall type I error rate (see, for example, Green et al.) (13).
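One widely used way to preserve the type I error rate across interim looks is an alpha-spending function, which fixes how much of the overall significance level is used by each information fraction t (the fraction of planned events observed). The sketch below evaluates the Lan-DeMets O'Brien-Fleming-type spending function, which spends very little alpha early. It is an illustration, not the method of any particular trial: the look schedule is hypothetical, and turning cumulative alpha into boundary z-values requires numerical integration over the joint distribution of the sequential test statistics, which dedicated design software performs and which is not shown here.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def obf_alpha_spent(t, alpha=0.05):
    """Cumulative two-sided alpha spent at information fraction t (0 < t <= 1)
    under the Lan-DeMets O'Brien-Fleming-type spending function."""
    if not 0 < t <= 1:
        raise ValueError("information fraction must lie in (0, 1]")
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return 2 * (1 - _STD_NORMAL.cdf(z_crit / sqrt(t)))


# Hypothetical schedule: three interim looks plus the final analysis.
previous = 0.0
for t in (0.25, 0.50, 0.75, 1.00):
    cumulative = obf_alpha_spent(t)
    print(f"t = {t:.2f}: cumulative alpha = {cumulative:.5f}, "
          f"spent at this look = {cumulative - previous:.5f}")
    previous = cumulative
```

Because the function reaches the full alpha only at t = 1, an early look has to show an extreme result before it crosses the efficacy guideline.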

Monitoring for safety

Although protocols specify the most important outcome from the point of view of efficacy, in safety monitoring, the most important outcomes are often still unknown. A taxonomy of safety end points provides a structure for thinking about how to monitor for them. A drug or other intervention has some risks that are known, some that are unexpected but non-serious, others that are unexpected but serious, some that are unexpected and life-threatening, and some that, although not credible, are frightening if true.

Monitoring for safety presents statistically difficult problems. In looking for safety signals, the IDMC searches for the unknown, the rare unexpected event. Problems of multiplicity abound. Although one can, and should, specify precisely the number of outcomes evaluated for efficacy, by definition one cannot specify the number of hypotheses relevant to safety. Instead, the IDMC must be prepared to react to surprises, turning a fundamentally hypothesis-generating (“data dredging”) exercise into a hypothesis-testing framework. Taking as our marching orders Good’s aphorism, “I make no mockery of honest ad hockery” (14), we suggest here approaches an IDMC can take in monitoring safety. First, the IDMC should remember that the informed consent document that the participants signed was a compact describing the risks and benefits known at the time the study began. The IDMC should also remember that people rarely enter a trial to prove that an intervention under study is harmful; rather, rational participants trust that, if the ongoing data show more risks than anticipated at the time they signed the informed consent document, the investigators will so inform them. Because the investigators cannot see the accumulating data by treatment group, they do not know how the risks are evolving; the IDMC must therefore evaluate the risks and benefits on an ongoing basis with a view toward informing the participants should the balance between risks and benefits change materially.

The known risks are the simplest for the IDMC because the role of safety monitoring for these outcomes is to ensure that the balance of risk and benefit continues to favor benefit. Even if the known risks are quite serious, the IDMC may recommend continuation if it is convinced that the patients are being well cared for and that they have been adequately informed of the risks. For example, in a trial of cancer chemotherapy in which febrile neutropenia occurs at a high rate, the IDMC examines the data to assure itself that the treating oncologists are preventing the most serious consequences.

The unknown, but not serious, risks pose uncomfortable questions to the IDMC, but usually an IDMC assumes that the benefits, although perhaps not yet obvious, will outweigh these risks. The number of AEs in an oncology trial can be daunting. Not only are the patients quite sick, but the drugs used to treat cancer are often toxic. It is often difficult for the IDMC to sort through all the AEs.

Unknown, but serious, risks that emerge during the course of a study may lead an IDMC to anxious discussion. If the signal is real, the IDMC asks itself whether the possible, as yet unobserved, benefit outweighs the risks. Even more troubling are those unexpected serious adverse events with dire consequences. For example, in a trial aimed at preventing mortality in breast cancer where the mortality rate is low, if the IDMC observes a small excess of stroke in the treated arm, it may take immediate action. It may ask for an expert on stroke to join the committee; it may ask for a special data collection instrument to enhance the accuracy of spontaneously reported stroke. Or, if the risk seems unacceptably high compared to the benefit seen in mortality, it may recommend stopping the trial.

Finally, sometimes an event occurs that is medically not credible but, if true, devastating. Even a very low rate (a single event perhaps) of fatal liver failure may lead to stopping the trial in tumor types with low mortality.

The IDMC has several tools for monitoring safety, especially for known events. It can set statistical boundaries for safety. Crossing the boundary means that the data have shown convincing evidence of additional harm. Sometimes an IDMC uses futility bounds for efficacy as ersatz safety bounds. It may adopt a bound that is symmetric with respect to the efficacy bounds; that is, one declares excess risk if the evidence for harm is as strong as the evidence for benefit would have been. Another approach uses a boundary more extreme than futility but less extreme than the symmetric bound. Still another approach is to establish an a priori balance of risk and benefit; if the ongoing data show that the balance has changed importantly in the direction of excess risk, the IDMC may recommend stopping the trial for safety.
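The first three boundary choices described above can be expressed as a single comparison of an interim z statistic against efficacy, futility, and harm guidelines. In the sketch below, a positive z favors the experimental arm; `harm_weight = 0` uses the futility bound itself as an ersatz harm bound, `harm_weight = 1` gives the symmetric bound, and values in between give a bound more extreme than futility but less extreme than the symmetric one. The function name, the linear interpolation, and the bound values are hypothetical illustrations; real bounds would come from the trial's design, and crossing one informs, rather than dictates, the IDMC's judgment. The fourth approach, an a priori risk-benefit balance, is not represented.

```python
def classify_look(z, efficacy_bound, futility_bound, harm_weight=0.5):
    """Compare an interim z statistic (positive = favors experimental arm) with
    hypothetical efficacy, futility, and harm guidelines at the same look.

    harm_weight = 0: the futility bound doubles as an ersatz harm bound.
    harm_weight = 1: symmetric harm bound at -efficacy_bound.
    In between: a harm bound between the futility and symmetric bounds.
    """
    if not 0 <= harm_weight <= 1:
        raise ValueError("harm_weight must lie in [0, 1]")
    harm_bound = futility_bound + harm_weight * (-efficacy_bound - futility_bound)
    if z >= efficacy_bound:
        return "efficacy guideline crossed"
    if z <= harm_bound:
        return "harm guideline crossed"
    if z <= futility_bound:
        return "futility guideline crossed"
    return "continue"


# Hypothetical bound values at one interim look, for illustration only.
EFFICACY, FUTILITY = 2.96, 0.0
for z in (3.1, 0.8, -0.9, -1.6, -3.0):
    print(z, classify_look(z, EFFICACY, FUTILITY),
          classify_look(z, EFFICACY, FUTILITY, harm_weight=1.0), sep=" | ")
```

With these made-up values, z = -1.6 crosses the intermediate harm guideline (-1.48) but only the futility guideline under the symmetric rule.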

If the emerging event was previously unreported, the IDMC may treat the observed excess as a hypothesis and use the remainder of the trial to test whether it is real.

The survival nature of these trials may make the assessment of AEs more difficult than in trials where most patients are alive at the end of the study. Consider the case where one treatment works much better than the other, increasing survival times by a non-trivial amount. Patients on the more favorable treatment will be followed longer and will likely receive more treatment. Both factors may contribute to more AEs being reported. In such cases, the toxicity profile of the more efficacious drug may seem worrisomely worse than that of the other drug if only counts and percentages are compared. In trials where this occurs, looking at event rates (events per patient-year of follow-up) is important to ensure a balanced comparison between the treatment groups.
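A small numerical sketch shows how the two summaries can disagree. The arm sizes, event counts, and follow-up times below are hypothetical, and the choice of denominator (total follow-up here; on-treatment time is another option) is an assumption a real report would need to state.

```python
from dataclasses import dataclass


@dataclass
class ArmSafety:
    name: str
    n_patients: int
    n_with_event: int      # patients with at least one event of interest
    n_events: int          # total events (a patient may contribute several)
    patient_years: float   # total follow-up time contributed by the arm

    def crude_percent(self) -> float:
        """Percentage of patients with at least one event (ignores exposure time)."""
        return 100 * self.n_with_event / self.n_patients

    def rate_per_100_py(self) -> float:
        """Exposure-adjusted rate: events per 100 patient-years of follow-up."""
        return 100 * self.n_events / self.patient_years


# Hypothetical numbers: arm A lives longer, so it accrues twice the follow-up.
arms = [
    ArmSafety("A (longer survival)", 100, 80, 180, 150.0),
    ArmSafety("B", 100, 60, 120, 75.0),
]
for arm in arms:
    print(f"{arm.name}: {arm.crude_percent():.0f}% with >= 1 event, "
          f"{arm.rate_per_100_py():.0f} events per 100 patient-years")
```

Arm A looks worse on the crude percentage (80% vs 60%) but better on the exposure-adjusted rate (120 vs 160 events per 100 patient-years).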

Enhancing safety data

Most safety data arrive as spontaneous reports, and these data are notoriously ambiguous. The investigator writes a description of the event and reports it on a case report form. The form arrives at the data center, and someone codes it using a standard dictionary. No classification system can perfectly capture events as complicated and varied as the full range of possible adverse events. Some methods can enhance the quality of these data. For some events, diaries provide a systematic approach to collection. For certain serious AEs, a masked outcome committee can review the reports. If, for instance, the drug under study is suspected of causing thrombotic events, an end-point committee can review spontaneously reported events that could potentially be thrombotic. Hurwitz et al. (15), in a phase III trial studying the effect of bevacizumab on metastatic colorectal cancer, developed specialized case report forms to collect data on AEs that had been observed in earlier trials. Codifying the collection in this way can increase the accuracy and completeness of the data.

An IDMC should not rely solely on coding systems; rather, it should classify and reclassify events to identify clusters of similar events. Categorization by body system may miss events that occur across body systems, for example, bleeding, thrombotic events, or pain. The IDMC should also review laboratory data, looking at both means and extreme percentiles. (Members should be wary of minima and maxima, which are notoriously subject to error.)

IDMCs: what makes oncology trials special?

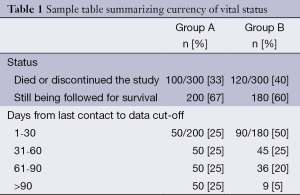

IDMCs for oncology trials are similar in many respects to other IDMCs. That said, oncology trials do differ from other trials in ways that affect IDMCs. In oncology trials, death is often the primary outcome. Even if the primary outcome is not death, the mortality rate is often very high. Whether or not survival analysis is presented in the IDMC report, the number of deaths will be provided because death is always a safety outcome. Unlike a parameter that is collected at each visit, a death is usually reported within a few days of its occurrence. Therefore, the IDMC must be careful to monitor both the number of events and how current the survival data actually are. More events may appear in one group simply because that group has been contacted more recently than the other group. In particular, if the experimental group is on a regimen that has more AEs than the control, the investigator may be in closer contact with that group. We suggest, therefore, that the report include a table summarizing how ‘up-to-date’ the death information is. Such a presentation may look like this (see Table 1).


The top part of the table shows more deaths in group B than in group A; however, follow-up in group A is severely lagging behind that of group B. Of the patients still being followed for survival, vital status was assessed within 60 days of the data cutoff for 50% of group A and 75% of group B.
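The currency figures in a table like Table 1 are simple to compute from each patient's vital status and date of last vital-status assessment. The sketch below is a minimal illustration; the tuple layout, the `vital_status_currency` helper, and the patient records are hypothetical, chosen so that the percentages match the 50% and 75% quoted above.

```python
from datetime import date


def vital_status_currency(patients, cutoff, window_days=60):
    """Summarize how current survival follow-up is, by arm.

    patients: iterable of (arm, is_dead, last_vital_status_date) tuples.
    Returns {arm: (deaths, alive_in_follow_up, pct_alive_assessed_within_window)}.
    """
    counts = {}
    for arm, is_dead, last_date in patients:
        deaths, alive, current = counts.get(arm, (0, 0, 0))
        if is_dead:
            deaths += 1
        else:
            alive += 1
            if (cutoff - last_date).days <= window_days:
                current += 1
        counts[arm] = (deaths, alive, current)
    return {arm: (d, a, 100 * c / a if a else float("nan"))
            for arm, (d, a, c) in counts.items()}


# Hypothetical data for illustration only.
CUTOFF = date(2024, 6, 30)
patients = [
    ("A", True, date(2024, 4, 2)),
    ("A", False, date(2024, 6, 15)),
    ("A", False, date(2024, 6, 1)),
    ("A", False, date(2024, 3, 10)),
    ("A", False, date(2024, 2, 1)),
    ("B", True, date(2024, 5, 5)),
    ("B", True, date(2024, 6, 2)),
    ("B", False, date(2024, 6, 20)),
    ("B", False, date(2024, 6, 10)),
    ("B", False, date(2024, 5, 20)),
    ("B", False, date(2024, 1, 15)),
]
summary_table = vital_status_currency(patients, CUTOFF)
for arm, (deaths, alive, pct) in sorted(summary_table.items()):
    print(f"Group {arm}: {deaths} deaths; {pct:.0f}% of {alive} living patients "
          f"assessed within 60 days of cutoff")
```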

In all trials, IDMCs need to decide how often to review the data. This question has no simple answer, and the emerging data in the trial may drive it. That said, we recommend meeting on a fixed schedule, say every 4 months, rather than at a specified number of events. A fixed schedule is much simpler operationally and allows meetings to be planned well in advance. Of course, formal interim analyses may need to be performed when a specified number of events has accrued, but these do not occur at every meeting.

Many oncology trials have a long-term follow-up phase that participants enter after they have completed all study treatments. In this phase, participants are monitored only for survival. Once all (or most) participants have reached this phase, the IDMC may need to meet only very infrequently, if at all. After all, it can no longer make recommendations that affect the safety of the participants in the trial. The sponsor may still want the IDMC to meet if it is running other trials that use the same treatment.

Special considerations for efficacy

Outcomes in oncology trials are typically overall survival (OS), PFS, or overall response rate (ORR). Depending on the study design, patients often transition to a long-term follow-up phase once they complete treatment or progress while on treatment. Consider mortality as the primary outcome. The time between contacts with the patient during this phase of the study may be several months, so deaths may have occurred that have not yet entered the database. How is the IDMC to monitor such a situation? One option is to recommend that the sponsor perform a vital-status sweep before each IDMC meeting to ensure that every patient has been contacted within, say, 30 or 60 days of the meeting. Depending on the size of the study, the speed of recruitment, and the nature of the disease (short or long survival times), such a census may be quite difficult to perform if almost every patient must be contacted. Later in the study, after many deaths have occurred, the process is less burdensome. We suggest performing, at a minimum, a sweep before each IDMC meeting at which pre-specified stopping guidelines will be applied.

A PFS outcome also has its challenges. Typically, PFS is based on the Response Evaluation Criteria in Solid Tumors (RECIST) (16), which are very difficult to implement in a computer program. To avoid programmatic issues for the reporting statistician, the data center should provide data on progression based on investigator assessment or on the results of an independent review committee. Often both measures are provided, and the results of the two may be very different. In any case, the IDMC will want to see the degree of concordance between the two measures.
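One simple way to summarize concordance is to compare, patient by patient, whether the investigator and the independent review committee each recorded progression by the data cutoff, and to report the observed agreement with Cohen's kappa. The sketch below assumes that status-level view; the helper name and data are hypothetical, and an IDMC may also want to see agreement on the timing of progression, which this does not capture.

```python
def progression_concordance(investigator, review_committee):
    """Agreement between two assessments of progression status (True = progressed).

    Returns (observed_agreement, cohens_kappa)."""
    if len(investigator) != len(review_committee) or not investigator:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(investigator)
    agree = sum(a == b for a, b in zip(investigator, review_committee))
    observed = agree / n
    p_inv = sum(investigator) / n
    p_irc = sum(review_committee) / n
    # Agreement expected by chance if the two assessments were independent.
    expected = p_inv * p_irc + (1 - p_inv) * (1 - p_irc)
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical progression status for ten patients, for illustration only.
inv = [True, True, True, True, True, False, False, False, False, False]
irc = [True, True, True, False, False, False, False, False, False, True]
observed, kappa = progression_concordance(inv, irc)
print(f"observed agreement = {observed:.2f}, kappa = {kappa:.2f}")
```

Here the two assessments agree for 7 of 10 patients, but chance alone would produce 50% agreement, so kappa is only 0.40.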

In order to ensure that PFS is as rigorous as possible, at least some analyses should focus on radiologic data rather than on assessments of ‘clinical progression’. If clinical progressions are included, a sensitivity analysis only including radiologic data should be provided.
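The sensitivity analysis amounts to deriving PFS twice, once counting clinical progressions as events and once ignoring them. The sketch below shows one way to do this for a single patient. The field names and the censoring convention (patients without an event censored at their last radiologic assessment) are assumptions for illustration; a real analysis would follow the censoring rules prespecified in the statistical analysis plan.

```python
def derive_pfs(patient, include_clinical=True):
    """Return (time_in_days, is_event) for progression-free survival.

    Assumed conventions, for illustration only: the event is the earliest of
    radiologic progression, death, and (optionally) clinical progression;
    patients without an event are censored at their last radiologic assessment.
    """
    candidates = [patient["radiologic_pd"], patient["death"]]
    if include_clinical:
        candidates.append(patient["clinical_pd"])
    event_days = [day for day in candidates if day is not None]
    if event_days:
        return min(event_days), True
    return patient["last_scan"], False


# Hypothetical patient with clinical, but no radiologic, progression.
pt = {"radiologic_pd": None, "clinical_pd": 100, "death": None, "last_scan": 112}
print(derive_pfs(pt))                          # (100, True): primary definition
print(derive_pfs(pt, include_clinical=False))  # (112, False): radiologic-only
```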

Unlike survival, which can be assessed continuously, PFS is by nature an interval-censored variable. Progression is usually defined in conjunction with radiologic assessments, perhaps every 6 or 8 weeks early in the study, and less frequently as the study progresses (investigators can also define clinical or symptomatic progression). Therefore, the Kaplan-Meier curves for PFS actually summarize OS, not progression, until the first radiologic assessment.
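A small Kaplan-Meier sketch illustrates the point. It assumes hypothetical data with scans every 56 days (8 weeks), progression dated at the scan that detects it, and no clinical progressions. Before the first scan, the only possible PFS events are deaths, so the early part of the curve is driven by mortality alone. Dating progression at the scan day is the usual right-censored shortcut; it ignores that the true progression time lies somewhere between scans.

```python
def kaplan_meier(times, events):
    """Product-limit estimate; returns [(t, S(t))] at each distinct event time.
    Patients censored at time t are treated as still at risk at t."""
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = n_leaving = 0
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_leaving += 1
            i += 1
        if n_events:
            surv *= 1 - n_events / at_risk
            curve.append((t, surv))
        at_risk -= n_leaving
    return curve


# Hypothetical PFS data (days). Scans every 56 days, so a progression can be
# recorded only on day 56, 112, 168, ...; earlier events can only be deaths.
FIRST_SCAN_DAY = 56
records = [  # (day, is_event, kind)
    (20, True, "death"), (40, True, "death"),
    (56, True, "progression"), (56, True, "progression"), (56, True, "progression"),
    (112, True, "progression"), (112, True, "progression"),
    (168, False, "censored"), (168, False, "censored"), (168, False, "censored"),
]
curve = kaplan_meier([r[0] for r in records], [r[1] for r in records])
for t, s in curve:
    print(f"day {t:>3}: S(t) = {s:.2f}")
```

In this example the curve steps to 0.90 and 0.80 on days 20 and 40, both deaths, before the first progressions appear at day 56.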

Statistically, ORR may be the simplest outcome. At each protocol-defined visit, the investigator determines response (complete response, partial response, stable disease, or progressive disease). The best of these outcomes over the course of the study is summarized in a table. ORR, however, is often not clinically meaningful enough to serve as the primary outcome of a phase III clinical trial.
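The summary described above can be sketched in a few lines: take each patient's best response across visits and report the proportion whose best response is complete or partial. This is deliberately simplified. It ignores RECIST confirmation and minimum-duration requirements, keeps counting visits after progression, and counts patients with no assessment ("NE") as non-responders. The helper names and data are hypothetical.

```python
RANK = {"CR": 3, "PR": 2, "SD": 1, "PD": 0}


def best_response(visit_responses):
    """Best response over a patient's visits; 'NE' if no assessment is available.
    Simplified: ignores RECIST confirmation and minimum-duration rules."""
    if not visit_responses:
        return "NE"
    return max(visit_responses, key=lambda r: RANK[r])


def objective_response_rate(responses_by_patient):
    """Proportion of all patients (including non-evaluable) with best response CR or PR."""
    best = [best_response(v) for v in responses_by_patient.values()]
    return sum(b in ("CR", "PR") for b in best) / len(best)


# Hypothetical visit-level responses, for illustration only.
responses = {
    "001": ["SD", "PR", "PR"],
    "002": ["SD", "PD"],
    "003": ["PR", "CR"],
    "004": ["PD"],
    "005": [],
}
print({pid: best_response(v) for pid, v in responses.items()})
print(f"ORR = {objective_response_rate(responses):.0%}")
```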

An IDMC faces a difficult challenge when OS and PFS tell different stories. The easier situation occurs when the direction of effect is the same for both outcomes but the magnitudes (or P values) are inconsistent. In this case, one outcome can be considered supportive of the other. If the new therapy shows longer OS but worse PFS, the IDMC may not worry much, although it may want to continue the trial, reasoning that the survival benefit may be the result of the play of chance.

More problematic is the situation when PFS shows promise but OS does not. In these situations, the IDMC needs to consider carefully the maturity of the data. PFS, by its nature, will have more events and results may be clear earlier. A negative signal in OS should be carefully monitored but may be due to a delayed effect of the treatment. The IDMC should be careful not to recommend stopping a study too early—more frequent monitoring is always an option for a concerned board.

Another problem may arise when participants take other therapies after progression or after stopping active study medication. In some trials, the landscape of available therapies is changing rapidly. In trials with long-term follow-up, even a slowly changing landscape can affect the results. Consider the case where a trial is initiated to compare Drug N (new therapy) with the standard therapy (Drug S). Early in the life of the trial, a third therapy, Drug X, is approved, and it is much better than Drug S. If patients progress more rapidly on Drug S, they are more likely to receive Drug X and to have longer survival times. In an analysis of OS, this may wash out any benefit of Drug N over Drug S. In these complicated situations, the IDMC may need additional analyses to assess the effect of the treatment correctly. These analyses may involve sophisticated statistical modeling.

One final situation to consider is that event-driven trials may take less or more time than expected. The first case poses no operational problem, but because it means events are occurring faster than anticipated, it may not bode well for the success of the trial. The second can be difficult, especially if the trial takes much longer than expected. If the treatment is working much better than expected, events will accrue considerably more slowly than anticipated. In fact, if there are many long-term survivors, the planned number of events may never be reached within a reasonable timeframe. Because the IDMC has reviewed the interim data, it should not advise the sponsor to stop early: the committee knows that early stopping would lead to a successful outcome, so its advice could not be neutral. The sponsor must make the decision on its own, on the basis of masked data.
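A back-of-the-envelope Python sketch shows how a better-than-expected treatment, or a plateau of long-term survivors, stretches an event-driven timeline. It assumes, for simplicity, that all patients start at time 0 (no staggered accrual), that a hypothetical `cure_fraction` of patients never has an event, and that everyone else has exponentially distributed event times. The trial size, event target, medians, and cure fractions are invented for illustration.

```python
import math

def expected_events(n, months, median_months, cure_fraction=0.0):
    """Expected events by `months`, if all n patients start at time 0, a fraction
    `cure_fraction` never has an event, and the rest have exponential event times."""
    rate = math.log(2) / median_months
    return n * (1 - cure_fraction) * (1 - math.exp(-rate * months))

def months_to_reach(target_events, n, median_months, cure_fraction=0.0):
    """Months until the expected event count reaches the target; None if it never can."""
    ceiling = n * (1 - cure_fraction)  # maximum possible number of events
    if target_events >= ceiling:
        return None
    rate = math.log(2) / median_months
    return -math.log(1 - target_events / ceiling) / rate

N_PATIENTS, TARGET = 600, 400
scenarios = [
    ("as planned", 12.0, 0.0),
    ("better than expected", 18.0, 0.20),
    ("many long-term survivors", 18.0, 0.35),
]
for label, median, cured in scenarios:
    months = months_to_reach(TARGET, N_PATIENTS, median, cured)
    if months is None:
        print(f"{label}: at most {N_PATIENTS * (1 - cured):.0f} events possible; target never reached")
    else:
        print(f"{label}: about {months:.0f} months to {TARGET} events")
```

Under these assumptions the planned 400 events arrive after roughly 19 months in the planning scenario but only after roughly 47 months in the second scenario, and never in the third. A sponsor can run this kind of projection on pooled, masked event counts without any knowledge of the treatment comparison.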

Special considerations for safety

In reviewing safety data in oncology trials, IDMCs recognize that participants experience many AEs, some arising from the progression of the disease itself and others, often called toxicities, caused by the treatment. Thus, to evaluate whether participants in the experimental arm are experiencing an unacceptably large burden of AEs, the IDMC must determine a tolerable level of toxicity and must partition the events into those that are disease-related and those that are caused by the experimental treatment. In general, randomization allows the IDMC to assume that any excess of events in the experimental group compared with the control group, if not due to chance, is likely to have been caused by the experimental therapy.

Judging whether an observed excess is tolerable requires the IDMC to evaluate the relative frequency of events in the arms of the study and then decide whether the likely benefit of the experimental therapy is worth the increased burden of adverse experiences. In many disease areas, adverse experiences are summarized both by seriousness (serious or not serious) and by severity (mild, moderate, severe, life-threatening, or fatal). Seriousness is a regulatory classification; severity is a clinical one. Oncologists in clinical practice categorize adverse events by grade. Grade 1 events are mild or asymptomatic and require no intervention. Grade 2 events require minimal intervention and may lead to some limitation of activities. Grade 3 events are severe but not life-threatening; they may limit patients’ ability to care for themselves. Grade 4 events are life-threatening and require urgent intervention. Finally, Grade 5 events are those that result in death (17). Because oncologists use this classification so commonly, IDMC reports in oncology should present events not only by seriousness but also by grade. A simple approach is to include a table of all adverse events and a table of all serious adverse events, with both tables classifying events as grade 1 or 2 and grade 3 or higher. This structure allows IDMC members to evaluate AEs both in the taxonomy with which they are most familiar (grade) and in the format often preferred by regulators (serious or non-serious).
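The two-table layout described above can be produced from a participant-level AE listing. In this minimal Python sketch, the record fields (`participant`, `arm`, `term`, `grade`, `serious`) and the example records are hypothetical. It counts each participant once per AE term at the worst grade reported, which is one common convention for AE summaries; a report could equally count events.

```python
from collections import Counter

def grade_band(grade):
    """Collapse a CTCAE grade (1-5) into the two bands used in the tables."""
    if grade not in (1, 2, 3, 4, 5):
        raise ValueError(f"unexpected CTCAE grade: {grade}")
    return "Grade 1-2" if grade <= 2 else "Grade >=3"

def ae_table(records, serious_only=False):
    """Count participants by (arm, AE term, grade band), at each participant's worst grade per term."""
    worst = {}
    for r in records:
        if serious_only and not r["serious"]:
            continue
        key = (r["arm"], r["term"], r["participant"])
        worst[key] = max(worst.get(key, 0), r["grade"])
    return Counter((arm, term, grade_band(g)) for (arm, term, _), g in worst.items())

# Hypothetical AE listing.
records = [
    {"participant": 1, "arm": "Experimental", "term": "Nausea", "grade": 1, "serious": False},
    {"participant": 1, "arm": "Experimental", "term": "Nausea", "grade": 3, "serious": True},
    {"participant": 2, "arm": "Experimental", "term": "Neutropenia", "grade": 4, "serious": True},
    {"participant": 3, "arm": "Control", "term": "Nausea", "grade": 2, "serious": False},
]
all_aes = ae_table(records)                          # table of all adverse events
serious_aes = ae_table(records, serious_only=True)   # table of serious adverse events
for title, table in [("All AEs", all_aes), ("Serious AEs", serious_aes)]:
    print(title)
    for (arm, term, band), n in sorted(table.items()):
        print(f"  {arm:<12} {term:<12} {band:<10} {n}")
```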

Examples

To fix ideas, consider three examples. None comes from an actual trial, but each example is quite similar to trials that did occur.

The first example comes from an unmasked trial in colon cancer testing whether the new treatment decreased mortality relative to standard therapy. Early in the trial, the IDMC observed excess mortality in the treated group. The committee, although scheduled to meet every 6 months, asked for a safety update 3 months after its first meeting, and the excess mortality had grown. Worried that the unmasked design might have led investigators to follow the treated group more intensively, and therefore to learn of deaths in that group more quickly, the board asked the investigators to determine the vital status of each participant as of a specific date. To preserve the integrity of the study, the IDMC did not explain why it wanted the data; it simply said that it could not responsibly monitor the trial without accurate information on mortality. The investigators then reported many more deaths; in fact, more deaths had occurred in the control group than in the experimental group. This experienced IDMC reacted to an apparent risk, but it recognized the potential bias in the design and, rather than stop the trial prematurely, asked for rapid collection of the relevant data.

In another example, an IDMC was monitoring a study of relief of bone pain symptoms in patients with prostate cancer. The treatment was showing clear benefit on symptoms but excess early mortality in the treated group (20 deaths in the treated group and 8 in the control group; nominal P value = 0.025). The sponsor was unwilling to give the IDMC rapid, accurate information on baseline factors. Without these data, the IDMC could not judge whether an imbalance in baseline prognostic factors explained the excess deaths, and it felt that it had no choice but to recommend stopping the study.
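As a rough check on the arithmetic, suppose randomization was 1:1 and follow-up was similar in the two groups (assumptions; the example does not say). Under no treatment effect, each of the 28 deaths would then be equally likely to fall in either group. The Python sketch below computes a normal-approximation z statistic and an exact conditional binomial P value for 20 versus 8 deaths. The example does not say which test produced its nominal P value, so this is an illustration, not a reconstruction.

```python
import math

def death_imbalance(deaths_treated, deaths_control):
    """Two-sided P values for an imbalance in deaths between two arms,
    assuming 1:1 randomization and similar follow-up, so each death is a
    fair coin flip between arms under the null hypothesis."""
    total = deaths_treated + deaths_control
    z = (deaths_treated - deaths_control) / math.sqrt(total)
    p_normal = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    k = max(deaths_treated, deaths_control)
    upper_tail = sum(math.comb(total, i) for i in range(k, total + 1)) / 2 ** total
    p_exact = min(1.0, 2 * upper_tail)  # doubled one-sided binomial tail
    return z, p_normal, p_exact

z, p_normal, p_exact = death_imbalance(20, 8)
print(f"z = {z:.2f}, normal-approximation P = {p_normal:.3f}, exact binomial P = {p_exact:.3f}")
```

Under these assumptions the normal approximation gives P of about 0.023, close to the nominal value in the example, while the exact binomial calculation is more conservative (about 0.036).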

A third trial, this one in small cell lung cancer, had mortality as its primary end point. The IDMC had been told to stop only for excess mortality in the treated group. After two-thirds of the data were available, the IDMC saw that almost identical proportions had died in the two groups (23% in the treated group, 24% in the control group). On the other hand, the data were showing an increase in many AEs in the treated group (e.g., nausea, vomiting, hand-foot syndrome). Judging that the balance of risk and benefit no longer favored the experimental treatment, the board recommended stopping the trial.

Summary and recommendations

In establishing an IDMC, the sponsor and investigators should carefully weigh decisions about the board's structure and function. They should select members on the basis of their expertise; membership should not be a “reward” for good recruitment in other trials. The structure of the meeting should facilitate, not impede, the ability of the IDMC to do its work. A sponsor that spends hours discussing material that the IDMC will be reviewing in closed session wastes valuable time.

IDMCs should operate under a clear charter, with expectations understood by all members of the committee, the sponsor, and the investigators. Investigators in multicenter trials should review the safety monitoring plans for the trials in which they participate and provide input into the composition and charge of the IDMC.

Sponsors must trust their IDMCs. They should recognize that an IDMC takes its responsibilities extremely seriously and, therefore, must give the IDMC the tools and the data that it needs to protect the safety of participants effectively.

Acknowledgements

Disclosure: The authors declare no conflict of interest.

References

- National Institutes of Health. Clinical trial committee guide. Bethesda, MD: National Institutes of Health; 1979, reaffirmed 1998. Available online: http://grants.nih.gov/grants/guide/notice-files/not98-084.html

- Practical Issues in Data Monitoring of Clinical Trials. Proceedings of a workshop. Bethesda, Maryland, 27-28 January 1992. Stat Med 1993;12:415-616.

- National Institutes of Health. NIH Phase III Clinical Trials Monitoring: A Survey Report, 1995. Available online: https://grants.nih.gov/grants/policy/hs/Clinical_Trials_Monitoring.pdf

- Guidance for Clinical Trial Sponsors – Establishment and Operation of Clinical Trial Data Monitoring Committees. US Department of Health and Human Services, Food and Drug Administration, Center for Biologics Evaluation and Research, Center for Drug Evaluation and Research, Center for Devices and Radiological Health, 2006.

- International Conference on Harmonisation Expert Working Group. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. Statistical Principles for Clinical Trials E9. In: ICH Harmonised Tripartite Guideline. Geneva, 1998. Available online:

- International Conference on Harmonisation Working Group. ICH Harmonised Tripartite Guideline: Guideline for Good Clinical Practice E6 (R1). International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use, 1996.

- DAMOCLES Study Group, NHS Health Technology Assessment Programme. A proposed charter for clinical trial data monitoring committees: helping them to do their job well. Lancet 2005;365:711-22.

- Ellenberg SS, Fleming TR, DeMets DL. Data Monitoring Committees in Clinical Trials: A Practical Perspective. Statistics in Practice. John Wiley & Sons, Ltd, 2002.

- Herson J. Data and Safety Monitoring Committees in Clinical Trials. CRC Press, 2009.

- Wittes J. Data Safety Monitoring Boards: a brief introduction. Biopharmaceutical Report 2000;8:1-7.

- Meinert CL. Masked monitoring in clinical trials--blind stupidity? N Engl J Med 1998;338:1381-2.

- Snapinn SM. Comment on “Data Safety Monitoring Boards”. Biopharmaceutical Report 2000;8:7-8.

- Green S, Benedetti J, Smith A, et al. Clinical Trials in Oncology, Third Edition. Chapman & Hall/CRC Interdisciplinary Statistics, 2012.

- Good IJ. The Estimation of Probabilities: An Essay on Modern Bayesian Methods. MIT Press, 1965.

- Hurwitz H, Fehrenbacher L, Novotny W, et al. Bevacizumab plus irinotecan, fluorouracil, and leucovorin for metastatic colorectal cancer. N Engl J Med 2004;350:2335-42.

- Eisenhauer EA, Therasse P, Bogaerts J, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer 2009;45:228-47.

- Common Terminology Criteria for Adverse Events (CTCAE) version 4.0. U.S. Department of Health and Human Services, National Institutes of Health, National Cancer Institute, 2010.