New technological advancements for interventional oncology

Introduction

Since the discovery of X-rays in 1895, radiology has been the most technology-driven specialty in medicine, evolving from a purely diagnostic discipline into a high-tech interventional one.

With the advent of radiomics, diagnosis is moving toward a histological level of detail, while image-guided interventional procedures are becoming less invasive, more precise and increasingly tailored to the individual patient (1). These procedures require sophisticated technical support in order to deliver the best possible locoregional therapy to the best-selected patient. Radiologists have been closely involved in these technological developments and are responsible for evaluating the strengths and weaknesses of the different investigations. Interventional radiologists, in particular, must master the appropriate integrated imaging algorithms in order to maximize the clinical effectiveness of treatments (2).

In this manuscript we discuss four hot “technological” topics of current interventional radiology: fusion imaging, artificial intelligence (AI), augmented reality (AR) and robotics. Although only recently introduced into clinical practice, they are rapidly becoming part of our daily workflow and are set to become essential instruments for the next generations of interventional radiologists.

Fusion imaging

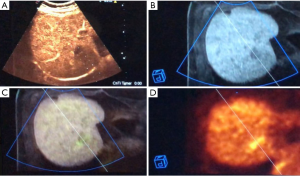

Fusion imaging combines the advantages of two or three different imaging modalities while compensating for their individual limitations, displaying co-registered images simultaneously on the same screen (Figure 1). A position-sensing unit tracks the probe, so that the reference images move in step with the real-time scan. Ultrasound (US) is the primary technique for most interventional procedures requiring fusion imaging, as it provides real-time imaging, is radiation-free, widely accessible and low-cost (3), while computed tomography (CT), magnetic resonance imaging (MRI) or positron emission tomography (PET) images are superimposed on it.

Before image fusion was available, the traditional process was described as “visual registration”, i.e., the mental integration of information from multiple techniques: before performing interventional procedures, radiologists examined previously acquired CT, MRI or PET studies and tried to mentally superimpose the findings onto the sonography performed during the procedure. However, this process can be very challenging when the US scanning plane is not strictly axial or longitudinal (as CT, MRI and PET planes are), when breathing displaces anatomical structures and hampers mental co-registration (4), and when air in the lungs and bowel makes target visualization very difficult or even impossible. Image fusion is nowadays performed with low-cost electromagnetic technology (a field generator and detectors applied to US probes and interventional devices) and increasingly simple software systems that require, for co-registration, only one scan plane and one anatomic landmark visible on both US and the reference modality. Thanks to these features, spatial alignment of the datasets can be achieved rapidly (in 2 to 10 minutes), with precise co-registration of two or even three imaging modalities simultaneously at millimetric accuracy, thus improving the speed, safety and outcomes of the procedures (4,5). US can be fused with pre-ablation 3D contrast-enhanced US (CEUS), CT, cone-beam CT (CBCT) (6) and MRI scans, or even with PET-CT; in the latter case, unenhanced CT (or contrast-enhanced CT in the most difficult situations) is used for co-registration, and PET is then overlaid on both US and CT (7).
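As a rough illustration of why one scan plane and one landmark can be enough, the sketch below assumes that matching the US scan plane to the reference volume has already fixed the rotation R, so the single landmark visible in both modalities only has to supply the translation t. The function names, the rotation and the millimetre coordinates are hypothetical; commercial fusion systems use their own registration and tracking pipelines, which are not described here.

```python
import math

Vector = tuple[float, float, float]
Matrix = tuple[Vector, Vector, Vector]


def mat_vec(r: Matrix, p: Vector) -> Vector:
    """Rotate a 3D point with a 3x3 rotation matrix."""
    return tuple(sum(r[i][j] * p[j] for j in range(3)) for i in range(3))


def translation_from_landmark(r: Matrix, landmark_us: Vector, landmark_ref: Vector) -> Vector:
    """Solve for t such that R * landmark_us + t == landmark_ref (one landmark fixes translation)."""
    rotated = mat_vec(r, landmark_us)
    return tuple(landmark_ref[i] - rotated[i] for i in range(3))


def us_to_reference(r: Matrix, t: Vector, p_us: Vector) -> Vector:
    """Map a point from tracked-US space into the CT/MRI reference space."""
    rotated = mat_vec(r, p_us)
    return tuple(rotated[i] + t[i] for i in range(3))


# Hypothetical rotation, assumed already fixed by matching the scan plane: 90 degrees about z.
theta = math.radians(90)
R: Matrix = ((math.cos(theta), -math.sin(theta), 0.0),
             (math.sin(theta), math.cos(theta), 0.0),
             (0.0, 0.0, 1.0))

landmark_us = (10.0, 0.0, 5.0)      # hypothetical landmark (e.g. a vessel bifurcation) in US space, mm
landmark_ref = (100.0, 60.0, 40.0)  # the same landmark located on the reference CT/MRI, mm

t = translation_from_landmark(R, landmark_us, landmark_ref)
target_us = (12.0, 3.0, 5.0)
print(us_to_reference(R, t, target_us))  # approximately (97.0, 62.0, 40.0)
```

Once R and t are known, every tracked US point (including the needle tip) can be mapped into the reference dataset, which is what lets the reference images follow the probe.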

The main purpose of image fusion is to allow precise targeting, during diagnostic (biopsy) or therapeutic (ablation) procedures, of lesions that are poorly conspicuous or completely undetectable on sonography because of their small size and/or location. In a recent paper, this aim was achieved in 95.6% of 295 tumors (162 hepatocellular carcinomas [HCCs] and 133 metastases; mean diameter 1.3±0.6 cm, range 0.5–2.5 cm) that were detectable on contrast-enhanced CT (CECT)/MRI but completely undetectable on unenhanced US and either totally undetectable or incompletely conspicuous on CEUS (8). More recently, complete microwave ablation was achieved in 82.1% of liver metastases measuring 2.0±0.8 cm that were extremely challenging or impossible to detect with US, CEUS and CECT (because of small size, poor acoustic window, lack of contrast enhancement, etc.) and were adequately visualized only on PET/CECT; the registration time ranged from 2.0 to 8.0 minutes, and the complication rate was the same as for conventional procedures (7).

A novel fusion imaging co-registration method combining pre-ablation CECT and post-ablation CBCT has recently been described to facilitate intraprocedural ablation assessment (9). Although CT and CBCT are both X-ray-based tomographic techniques, their datasets differ substantially in field of view, speed of rotation, resolution and radiation dose. With this new protocol, 38 liver nodules, including both HCCs and metastases, were co-registered. In 12/38 ablations, intraprocedural CBCT showed residual unablated tumor, which was confirmed by post-procedural CECT in the 4 cases in which the unablated volume exceeded 20% of the initial tumor volume. By allowing earlier identification of residual tumor after ablation, this method may reduce the need for retreatment of partially ablated tumors.
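Once the pre-ablation CECT and intraprocedural CBCT are co-registered, the unablated fraction becomes simple set arithmetic on voxel masks. The sketch below assumes both segmentations are already resampled onto a common voxel grid and stored as sets of (i, j, k) indices; the cube-shaped toy masks, the 1 mm voxel size and the use of the 20% figure as a flag are illustrative assumptions, not the published protocol.

```python
Voxel = tuple[int, int, int]


def cube(center: Voxel, half: int) -> set[Voxel]:
    """Toy segmentation: every voxel of a cube with the given half-width."""
    ci, cj, ck = center
    return {(i, j, k)
            for i in range(ci - half, ci + half + 1)
            for j in range(cj - half, cj + half + 1)
            for k in range(ck - half, ck + half + 1)}


def residual_fraction(tumor: set[Voxel], ablation: set[Voxel]) -> float:
    """Fraction of the pre-ablation tumor volume not covered by the ablation zone."""
    if not tumor:
        raise ValueError("empty tumor mask")
    return len(tumor - ablation) / len(tumor)


VOXEL_MM3 = 1.0  # hypothetical isotropic 1 mm voxels

tumor = cube((0, 0, 0), 2)     # 5x5x5 = 125 voxels on pre-ablation CECT
ablation = cube((3, 0, 0), 3)  # 7x7x7 ablation zone on intraprocedural CBCT, off-centre
frac = residual_fraction(tumor, ablation)
print(f"unablated: {frac:.0%} ({len(tumor - ablation) * VOXEL_MM3:.0f} mm^3)")  # unablated: 40% (50 mm^3)
print("above 20% threshold:", frac > 0.20)                                      # True
```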

Image fusion can also be particularly useful for diagnostic and therapeutic interventional procedures on small and/or poorly visible renal masses (10). Newer fields of application of fusion imaging include pancreatic interventions, such as guiding drainage placement for infected collections after pancreatitis (11), and the assistance of endovascular treatments and their follow-up (12).

New technological advances in image fusion are currently under development. The most promising and clinically interesting are fully automatic co-registration and synchronization of the patient's respiration on both US and the reference imaging modality. The latter is achieved by placing an additional electromagnetic detector on the patient's thorax to record the respiratory phases. Two fine-tunings of the registration are then performed, one in inspiration and one in expiration, so that the patient's breathing is visualized simultaneously on real-time US and on the pre-acquired reference modality (CECT, MRI, CBCT).
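One simple way to picture respiratory synchronization is to interpolate between the two fine-tuned registrations according to the reading of the thoracic detector. The sketch assumes that the detector yields a scalar displacement, that each fine-tuning is stored as a translation offset, and that motion between expiration and inspiration is linear; real systems may use richer motion models, and all names and numbers here are hypothetical.

```python
Vector = tuple[float, float, float]


def respiratory_phase(sensor_mm: float, exp_mm: float, insp_mm: float) -> float:
    """Normalise the chest-detector reading to 0 (expiration) ... 1 (inspiration)."""
    if insp_mm == exp_mm:
        raise ValueError("inspiration and expiration readings must differ")
    phase = (sensor_mm - exp_mm) / (insp_mm - exp_mm)
    return min(1.0, max(0.0, phase))  # clamp to the calibrated range


def blended_offset(phase: float, off_exp: Vector, off_insp: Vector) -> Vector:
    """Linearly interpolate between the expiration and inspiration fine-tunings."""
    return tuple((1.0 - phase) * e + phase * i for e, i in zip(off_exp, off_insp))


# Hypothetical calibration: the thoracic detector reads 0 mm at expiration and 12 mm at
# inspiration; the two fine-tunings shifted the reference volume by these offsets (mm).
EXP_MM, INSP_MM = 0.0, 12.0
OFF_EXP: Vector = (0.0, 0.0, 0.0)
OFF_INSP: Vector = (0.0, 4.0, -18.0)

for reading in (0.0, 6.0, 12.0, 15.0):
    phase = respiratory_phase(reading, EXP_MM, INSP_MM)
    print(reading, phase, blended_offset(phase, OFF_EXP, OFF_INSP))
```

Clamping keeps readings beyond the calibrated range (e.g. a deep breath) from extrapolating the registration beyond the two measured states.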

AI

AI is a fast-growing area of informatics and computing, highly relevant for radiology (13).

AI involves computers able to perform tasks that have traditionally required human intelligence; in other words, it is the ability of a computer to think and learn (14). In everyday life, AI has led to significant advances, from web search, touchless phone commands and self-driving vehicles to language processing and computer vision, tasks that until a few years ago could only be done by humans (15).

AI includes machine learning (ML) and its subset, deep learning (DL), which in imaging is mostly implemented with convolutional neural networks (CNN).

ML includes all approaches that allow computers to learn from data without being explicitly programmed, and it has already been applied extensively to medical imaging (16). ML-based algorithms make intelligent predictions based on large datasets consisting of millions of unique data points (17). However, within radiology, the term AI usually refers to its more advanced components, DL and CNN (14).

Neural networks mimic their biological counterparts, transferring data through a web of nodes organized in interconnected layers. At each connection the data are multiplied by a weight, layer after layer, until the final layer outputs the requested answer. The critical component of a neural network is training, i.e., finding the best weights for the mappings between nodes (17).
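The idea that training means finding the best weights can be made concrete with a toy example in plain Python. The sketch shows a forward pass through layers of weighted nodes and then trains a single sigmoid node by gradient descent on the logical AND problem; the data, learning rate and epoch count are arbitrary illustrative choices and have nothing to do with a radiological model.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def forward(layers, inputs):
    """Pass inputs through successive layers.

    Each layer is a list of nodes, each node a (weights, bias) pair. A node's output is
    the sigmoid of the weighted sum of the previous layer's outputs plus its bias.
    """
    values = inputs
    for layer in layers:
        values = [sigmoid(sum(w * v for w, v in zip(weights, values)) + bias)
                  for weights, bias in layer]
    return values


def train_neuron(data, epochs=5000, lr=0.5, seed=0):
    """Find the weights of one sigmoid node by gradient descent on cross-entropy loss."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(len(data[0][0]))]
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            out = forward([[(weights, bias)]], x)[0]
            error = out - target  # gradient of the loss w.r.t. the weighted sum
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_neuron(AND_DATA)
for x, target in AND_DATA:
    print(x, target, round(forward([[(w, b)]], x)[0], 3))
```

Real networks have many layers and millions of weights, and are trained with backpropagation in dedicated libraries, but the principle of adjusting weights to reduce prediction error is the same.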

CNN applied to radiology are a variant of neural networks in which the first few layers compare each part of an image against several smaller sub-images (features). Each node holds a feature derived from the previous layer, and its output to the next layer depends on how closely a part of the image resembles that feature. CNN mimic the behavior of the visual cortex, which contains complex arrangements of cells sensitive to small regions of the visual field (16): each unit detects a signal from its receptive field, processes it and transfers the result to the next layer (18).

Decision trees are classical ML tools. A decision tree classifies items by posing a series of questions about their intrinsic features. Each node contains a question and points to another node for each possible answer to that question. Decision trees are used to classify previously unseen examples and, if trained on high-quality data, can make very accurate predictions (19).
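The question-per-node structure can be sketched as a small recursive learner. The version below is a minimal CART-style tree, assuming binary threshold questions ("is feature f ≤ t?") chosen by Gini impurity; the two-feature dataset is invented for illustration and has no clinical meaning.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 when all labels agree."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_question(rows, labels):
    """Return the (feature, threshold) that most reduces impurity, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            yes = [l for r, l in zip(rows, labels) if r[f] <= t]
            no = [l for r, l in zip(rows, labels) if r[f] > t]
            if not yes or not no:
                continue
            score = (len(yes) * gini(yes) + len(no) * gini(no)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def grow(rows, labels, depth=0, max_depth=3):
    """A leaf is a label; an internal node is (feature, threshold, yes_branch, no_branch)."""
    question = best_question(rows, labels) if depth < max_depth else None
    if question is None:
        return Counter(labels).most_common(1)[0][0]
    f, t = question
    yes = [(r, l) for r, l in zip(rows, labels) if r[f] <= t]
    no = [(r, l) for r, l in zip(rows, labels) if r[f] > t]
    return (f, t,
            grow([r for r, _ in yes], [l for _, l in yes], depth + 1, max_depth),
            grow([r for r, _ in no], [l for _, l in no], depth + 1, max_depth))


def classify(tree, row):
    """Answer one question per node until a leaf is reached."""
    while isinstance(tree, tuple):
        f, t, yes, no = tree
        tree = yes if row[f] <= t else no
    return tree


rows = [(1.0, 5.0), (2.0, 4.0), (3.0, 7.0), (6.0, 2.0), (7.0, 3.0), (8.0, 8.0)]
labels = ["A", "A", "A", "B", "B", "B"]
tree = grow(rows, labels)
print(tree)                                                    # (0, 3.0, 'A', 'B')
print(classify(tree, (2.5, 6.0)), classify(tree, (7.5, 1.0)))  # A B
```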

In the random forest approach, many different decision trees are grown by a randomized tree-building algorithm and then combined. For each tree, a modified training set of the same size as the original is produced by sampling with replacement, so that some training items are included more than once. Moreover, when choosing the question at each node, only a small random subset of the features is considered. With these two modifications, each run yields a slightly different tree, and the predictions of the trees are then combined, for classification typically by majority vote (19).
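The two randomizations described above (bootstrap resampling and a random feature subset per question), followed by a majority vote, can be sketched as follows. For brevity each tree here is a single-question "stump" chosen by Gini impurity rather than a fully grown tree; the dataset, number of trees and feature-subset size are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(rows, labels, features):
    """Pick the best single 'is feature f <= t?' question among the allowed features."""
    best, best_score = None, float("inf")
    for f in features:
        for t in sorted({r[f] for r in rows}):
            yes = [l for r, l in zip(rows, labels) if r[f] <= t]
            no = [l for r, l in zip(rows, labels) if r[f] > t]
            if not yes or not no:
                continue
            score = (len(yes) * gini(yes) + len(no) * gini(no)) / len(labels)
            if score < best_score:
                best, best_score = (f, t, majority(yes), majority(no)), score
    if best is None:  # bootstrap sample allowed no split: predict a constant label
        label = majority(labels)
        return (0, float("inf"), label, label)
    return best


def fit_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap: same size as the original set, drawn with replacement.
        idx = [rng.randrange(len(rows)) for _ in rows]
        # Only a small random subset of features is considered for the question.
        feats = rng.sample(range(len(rows[0])), n_features)
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest


def predict(forest, row):
    """Each tree votes; the most common answer wins."""
    return majority([yes if row[f] <= t else no for f, t, yes, no in forest])


rows = [(1.0, 1.0), (2.0, 1.5), (1.5, 2.0), (6.0, 7.0), (7.0, 6.5), (6.5, 8.0)]
labels = ["A", "A", "A", "B", "B", "B"]
forest = fit_forest(rows, labels)
print(predict(forest, (0.5, 0.5)), predict(forest, (9.0, 9.0)))  # A B
```

Because each tree sees a different resampled dataset and different features, individual errors tend to cancel out in the vote.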

By contrast, DL can discover relevant features on its own, without their being defined and labeled in advance; compared with classical ML, however, DL needs larger datasets, and its decision process is not transparent (hidden layers).

Recent advances in algorithms and computing power, especially graphics processing units (GPUs), have made much deeper networks feasible. As the number of layers increases, more complex relationships can be modeled (18).

In this setting, convolution operations are performed to obtain feature maps, in which the value of each pixel/voxel is calculated as the sum of the original pixel/voxel and its neighbors, each weighted by a convolution matrix (kernel). Different kernels can be used for specific tasks, such as image blurring, sharpening or edge detection (16).
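The weighted-neighborhood computation can be shown on a toy 5×5 image with three common 3×3 kernels. The kernel values below are standard textbook examples chosen as an assumption, not kernels from any specific CNN; like most CNN libraries, the sketch computes cross-correlation (the kernel is not flipped), which gives the same result for these symmetric kernels.

```python
Image = list[list[float]]

BLUR = [[1 / 9] * 3 for _ in range(3)]                 # box blur: mean of the 3x3 neighborhood
SHARPEN = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]        # boosts the centre relative to neighbors
EDGE = [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]]       # Laplacian-style edge detector


def convolve(image: Image, kernel: Image) -> Image:
    """Each output pixel = weighted sum of an input pixel and its neighbors.

    Border pixels are skipped ('valid' mode), so the output is smaller than the input.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


# Toy 5x5 "image": a bright square on a dark background.
img = [[0, 0, 0, 0, 0],
       [0, 9, 9, 9, 0],
       [0, 9, 9, 9, 0],
       [0, 9, 9, 9, 0],
       [0, 0, 0, 0, 0]]

for name, k in (("blur", BLUR), ("sharpen", SHARPEN), ("edge", EDGE)):
    print(name, convolve(img, k))
```

The edge kernel returns 0 in the uniform interior of the square and large values at its corners, which is exactly the kind of feature map early CNN layers produce; in a CNN, however, the kernel weights are learned during training rather than chosen by hand.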

The application of AI to medical images has generated considerable anxiety among radiologists about the imminent extinction of the specialty (13). In our opinion, radiologists will not be reduced to simple image analysts. We have been at the forefront of the medical digital era, as the first physicians to adopt computers in the daily workflow, and we are now probably the most digitally literate healthcare professionals. Nonetheless, AI will strengthen our role only if we are able to embrace this technology, which can relieve us of monotonous, routine tasks, and to teach new generations how to use it properly. AI could also ease radiologists' concern about the overload of examinations to be reported, allowing them to focus on more clinical tasks, such as communicating with patients and interacting with multidisciplinary teams.

The traditional approach has always relied on trained physicians who visually assess radiological images, detect and report findings, and characterize and monitor diseases. This assessment is, by its nature, subjective and based on the radiologist's experience. In contrast to such qualitative reasoning, AI excels at recognizing complex patterns in imaging data and can provide quantitative assessments in an automated fashion (15).

The fields of radiology that have so far received the most support from AI are MRI and neuroradiology (16); nonetheless, interventional radiology can also benefit from this new wave of AI.

First, interventional radiologists will benefit from more precise diagnosis. A CNN has recently been used to autonomously classify liver masses on dynamic contrast-enhanced CT with promising results (20), and in the future MRI could be integrated as input data, offering higher soft-tissue contrast resolution and three-dimensional volumetric evaluation (18).

ML may also improve patient selection for interventional procedures. For example, recent studies documented the feasibility of predicting the response of hepatocellular carcinoma to transarterial chemoembolization (TACE) (21,22). Because many variables contribute to TACE response, a large set of clinical, laboratory, demographic and imaging features was assembled and tested for correlation with patient response to treatment. More broadly, such models may identify responders before any locoregional therapy is performed, sparing unnecessary treatments in patients who would not benefit.

AI can also be used to predict tumor recurrence after percutaneous treatments. Assessing ablation completeness is often challenging, because edema adjacent to the ablation volume may conceal minimal residual tumor. A recent manuscript described the application of AI-based software (AblationFit, R.A.W. s.r.l., Italy) for segmentation, rigid and non-rigid co-registration and volumetric analysis of pre-ablation and post-ablation imaging. It aims at precise assessment of ablation completeness, exact definition of ablative margins in 3D, and prediction of the occurrence and exact location of local tumor progression on follow-up (23). In a retrospective analysis of 90 HCCs in 50 patients, the software was able to “retrospectively predict” incomplete tumor treatment (undetected by visual assessment at the time of the CT study) in 76.5% of the HCCs that actually developed tumor progression on follow-up, with a sensitivity of 62% and a specificity of 94%. In addition, all these local progressions occurred in the exact location where residual non-ablated tumor had been identified by AblationFit.
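The notions of residual tumor and minimal ablative margin can be sketched on co-registered voxel masks: tumor voxels outside the ablation zone are residual tumor; if there are none, the smallest distance from a tumor voxel to the nearest non-ablated voxel approximates the minimal margin. This brute-force sketch is not the AblationFit algorithm, which is not described here; it assumes isotropic voxels of a hypothetical size, cube-shaped toy masks, and distances measured between voxel centres.

```python
import math

Voxel = tuple[int, int, int]
FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cube(center: Voxel, half: int) -> set[Voxel]:
    """Toy segmentation: every voxel of a cube with the given half-width."""
    cx, cy, cz = center
    return {(x, y, z)
            for x in range(cx - half, cx + half + 1)
            for y in range(cy - half, cy + half + 1)
            for z in range(cz - half, cz + half + 1)}


def outer_shell(mask: set[Voxel]) -> set[Voxel]:
    """Non-ablated voxels that share a face with the ablation zone."""
    return {(x + dx, y + dy, z + dz)
            for x, y, z in mask
            for dx, dy, dz in FACE_NEIGHBORS} - mask


def ablation_report(tumor: set[Voxel], ablation: set[Voxel], voxel_mm: float = 1.0) -> dict:
    """Residual tumor voxels and a coarse minimal ablative margin (centre-to-centre)."""
    residual = tumor - ablation
    if residual:
        # Incomplete ablation: part of the tumor lies outside the ablation zone.
        return {"residual_voxels": len(residual), "min_margin_mm": 0.0}
    shell = outer_shell(ablation)
    margin = min(math.dist(t, s) for t in tumor for s in shell)
    return {"residual_voxels": 0, "min_margin_mm": margin * voxel_mm}


tumor = cube((0, 0, 0), 1)                          # 3x3x3 toy tumor
print(ablation_report(tumor, cube((0, 0, 0), 4)))   # centred ablation zone
print(ablation_report(tumor, cube((3, 0, 0), 2)))   # off-centre zone leaves residual tumor
```

Knowing which tumor voxels lie outside the ablation zone also gives their location, which is the information that allows the site of a later progression to be anticipated.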

Lastly, recent literature suggests that AR will be a major future trend for AI in interventional radiology. AR, defined as an interactive experience of a real-world environment in which real-world objects are enhanced by computer-generated perceptual information, can be applied to interventional procedures by precisely overlaying the patient's 3D imaging reconstruction from CT or MRI scans onto the real patient (24-26). By combining a customized needle handle with markers attached on top, fitted to the interventional device, radiopaque tags applied to the patient's skin before the reference CT scan, and goggles worn by the operator, it is possible to display the AR content (3D CT reconstructions) superimposed on the operator's view of the procedure (i.e., the patient). When the distance between the tip of the interventional device (needle, probe, etc.) and the geometric center of the target is 0, the device is correctly positioned at the center of the intended target.
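The positioning criterion above reduces to a Euclidean distance in the CT coordinate frame. The sketch assumes that the tracking system already reports the needle tip in the same millimetre coordinates as the segmented target, and that a small tolerance is accepted in place of an exact 0; the coordinates, the 1 mm tolerance and the function names are hypothetical.

```python
import math

Point = tuple[float, float, float]


def centroid(points: list[Point]) -> Point:
    """Geometric center of a segmented target (mean of its voxel coordinates)."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def tip_to_target_mm(tip: Point, target_voxels: list[Point]) -> float:
    """Distance between the tracked device tip and the target's geometric center."""
    return math.dist(tip, centroid(target_voxels))


def on_target(tip: Point, target_voxels: list[Point], tolerance_mm: float = 1.0) -> bool:
    """Hypothetical acceptance test: the tip lies within tolerance of the target center."""
    return tip_to_target_mm(tip, target_voxels) <= tolerance_mm


target = [(49.0, 39.0, 29.0), (51.0, 41.0, 31.0)]  # toy target, centroid (50, 40, 30) mm
print(tip_to_target_mm((53.0, 44.0, 30.0), target))  # 5.0
print(on_target((50.0, 40.0, 30.5), target))         # True
```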

AR has already been reported as a valid teaching instrument for young radiologists approaching interventional procedures, as well as an accurate probe-placement guidance tool for thermal ablation and vertebroplasty (27), and its potential future fields of application are very broad.

Robotics

In recent decades, medicine has moved from open procedures and non-targeted medications to tailored, minimally invasive therapies for complex diseases. Young doctors choosing a career in interventional radiology can therefore expect a future full of developments. We have already discussed the promise of AI and fusion imaging for improving the radiologist's ability to diagnose and treat beyond what the eye can see; to increase the safety and accuracy of targeted procedures, the next big step could be robotics (28).

According to the Robot Institute of America, a robot is “a reprogrammable, multifunctional manipulator designed to move materials, parts, tools, or other specialized devices through various programmed motions for the performance of a variety of tasks” (29).

Several types of robots are already applied in interventional radiology. Navigational robots, for instance, offer the radiologist reliable trackability and maneuverability during percutaneous or endovascular interventions (5). For example, encouraging preliminary results regarding radiation exposure were demonstrated for robot-assisted, cone-beam CT-guided needle deployment in phantoms (30), with comparable accuracy between manual and robotic targeting.

Robots may also assist peripheral vascular interventions, with the aim of limiting the occupational hazards associated with radiation exposure. From a radiation-shielded remote workstation, the interventional radiologist can perform arterial revascularization by controlling catheters and guidewires with only two joysticks (31).

Thanks to higher soft-tissue contrast and a detailed three-dimensional view, MRI-guided procedures allow more precise targeting of abdominal lesions than CT guidance. MRI-compatible materials are already employed for interventional procedures such as thermal ablation, and MRI allows real-time monitoring of the thermal distribution, reducing damage to healthy tissue around the targeted lesion (32). In the near future, robot-assisted MRI-guided interventional procedures could become a reality, as has already happened in brain and prostate surgery (33).

Nonetheless, the use of robots for interventional procedures has some limitations. Since the anatomy is identified by coordinates, highly accurate calibration must be performed before the procedure. Moreover, as with fusion imaging, organ movement (due to respiration, for example) can be a problem; some systems can already compensate for breathing or minimal patient movements, but recognition must be fast and recalibration accurate. Other issues that deserve attention include the lack of force feedback, especially in robotic systems with active needle deployment, the length of operator training, and the cost-effectiveness of robots (29).

Conclusions

The modern interventional radiologist is involved in the development of new technologies aimed at delivering tailored and effective therapies. New instruments such as fusion imaging, AI, AR and robotics will become essential tools in the years to come, with a very wide range of applications. However, some aspects and limitations must be carefully evaluated, and the efficacy of all these innovations needs to be proven in structured, prospective studies.

Acknowledgments

None.

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

References

- Stern SM. Technology in radiology: advances in diagnostic imaging & therapeutics. J Clin Eng 1993;18:425-32.

- European Society of Radiology. 2009. The future role of radiology in healthcare. Insights Imaging 2010;1:2-11.

- Wang SY. Real-Time Fusion Imaging of Liver Ultrasound. J Med Ultrasound 2017;25:9-11.

- European Society of Radiology (ESR). Abdominal applications of ultrasound fusion imaging technique: liver, kidney, and pancreas. Insights Imaging 2019;10:6.

- Chehab MA, Brinjikji W, Copelan A, et al. Navigational tools for interventional radiology and interventional oncology applications. Semin Intervent Radiol 2015;32:416-27.

- Monfardini L, Gennaro N, Della Vigna P, et al. Cone-Beam CT-Assisted Ablation of Renal Tumors: Preliminary Results. Cardiovasc Intervent Radiol 2019;42:1718-25.

- Mauri G, Gennaro N, De Beni S, et al. Real-Time US-18FDG-PET/CT Image Fusion for Guidance of Thermal Ablation of 18FDG-PET-Positive Liver Metastases: The Added Value of Contrast Enhancement. Cardiovasc Intervent Radiol 2019;42:60-8.

- Mauri G, Cova L, De Beni S, et al. Real-time US-CT/MRI image fusion for guidance of thermal ablation of liver tumors undetectable with US: results in 295 cases. Cardiovasc Intervent Radiol 2015;38:143-51.

- Solbiati M, Passera KM, Goldberg SN, et al. A Novel CT to Cone-Beam CT Registration Method Enables Immediate Real-Time Intraprocedural Three-Dimensional Assessment of Ablative Treatments of Liver Malignancies. Cardiovasc Intervent Radiol 2018;41:1049-57.

- Amalou H, Wood BJ. Multimodality Fusion with MRI, CT, and Ultrasound Contrast for Ablation of Renal Cell Carcinoma. Case Rep Urol 2012;2012:390912.

- Zhang H, Chen GY, Xiao L, et al. Ultrasonic/CT image fusion guidance facilitating percutaneous catheter drainage in treatment of acute pancreatitis complicated with infected walled-off necrosis. Pancreatology 2018;18:635-41.

- Joh JH, Han SA, Kim SH, et al. Ultrasound fusion imaging with real-time navigation for the surveillance after endovascular aortic aneurysm repair. Ann Surg Treat Res 2017;92:436-9.

- European Society of Radiology (ESR). What the radiologist should know about artificial intelligence - an ESR white paper. Insights Imaging 2019;10:44.

- Pakdemirli E. Artificial intelligence in radiology: friend or foe? Where are we now and where are we heading? Acta Radiol Open 2019;8:2058460119830222.

- Hosny A, Parmar C, Quackenbush J, et al. Artificial intelligence in radiology. Nat Rev Cancer 2018;18:500-10.

- Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp 2018;2:35.

- Nichols JA, Herbert Chan HW, Baker MAB. Machine learning: applications of artificial intelligence to imaging and diagnosis. Biophys Rev 2019;11:111-8.

- Letzen B, Wang CJ, Chapiro J. The role of artificial intelligence in interventional oncology: A primer. J Vasc Interv Radiol 2019;30:38-41.e1.

- Kingsford C, Salzberg SL. What are decision trees? Nat Biotechnol 2008;26:1011-3.

- Yasaka K, Akai H, Abe O, et al. Deep Learning with Convolutional Neural Network for Differentiation of Liver Masses at Dynamic Contrast-enhanced CT: A Preliminary Study. Radiology 2018;286:887-96.

- Abajian A, Murali N, Savic LJ, et al. Predicting Treatment Response to Intra-arterial Therapies for Hepatocellular Carcinoma with the Use of Supervised Machine Learning-An Artificial Intelligence Concept. J Vasc Interv Radiol 2018;29:850-7.e1.

- Morshid A, Elsayes KM, Khalaf AM, et al. A machine learning model to predict hepatocellular carcinoma response to transcatheter arterial chemoembolization. Radiol Artif Intell 2019;1:e180021.

- Solbiati M, Muglia R, Goldberg SN, et al. A novel software platform for volumetric assessment of ablation completeness. Int J Hyperthermia 2019;36:337-43.

- Solbiati M, Passera KM, Rotilio A, et al. Augmented reality for interventional oncology: proof-of-concept study of a novel high-end guidance system platform. Eur Radiol Exp 2018;2:18.

- Das M, Sauer F, Schoepf UJ, et al. Augmented reality visualization for CT-guided interventions: system description, feasibility, and initial evaluation in an abdominal phantom. Radiology 2006;240:230-5.

- Uppot RN, Laguna B, McCarthy CJ, et al. Implementing virtual and augmented reality tools for radiology education and training, communication, and clinical care. Radiology 2019;291:570-80.

- Auloge P, Cazzato RL, Ramamurthy N, et al. Augmented reality and artificial intelligence-based navigation during percutaneous vertebroplasty: a pilot randomised clinical trial. Eur Spine J 2019. [Epub ahead of print].

- Kassamali RH, Ladak B. The role of robotics in interventional radiology: current status. Quant Imaging Med Surg 2015;5:340-3.

- Cleary K, Melzer A, Watson V, et al. Interventional robotic systems: applications and technology state-of-the-art. Minim Invasive Ther Allied Technol 2006;15:101-13.

- Pfeil A, Cazzato RL, Barbé L, et al. Robotically Assisted CBCT-Guided Needle Insertions: Preliminary Results in a Phantom Model. Cardiovasc Intervent Radiol 2019;42:283-8.

- Mahmud E, Schmid F, Kalmar P, et al. Feasibility and safety of robotic peripheral vascular interventions: results of the RAPID trial. JACC Cardiovasc Interv 2016;9:2058-64.

- Zhu M, Sun Z, Ng CK. Image-guided thermal ablation with MR-based thermometry. Quant Imaging Med Surg 2017;7:356-68.

- Hata N, Moreira P, Fischer G. Robotics in MRI-Guided Interventions. Top Magn Reson Imaging 2018;27:19-23.